A practical introduction to how LLMs work, what tokens are, and the limits developers must understand before building with AI.

Large Language Models feel magical at first, but building reliable features with them requires understanding a few key concepts. This post gives developers a practical mental model of how LLMs work—without diving into research papers.

What an LLM Actually Is

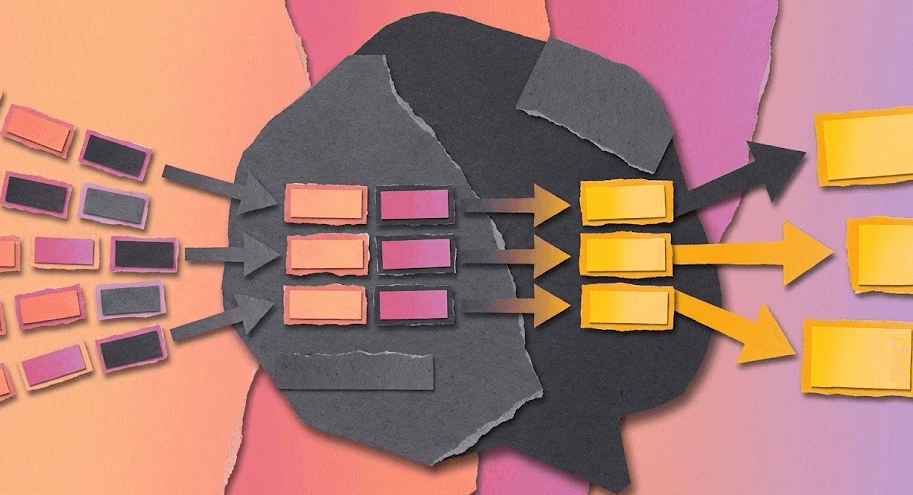

A Large Language Model is a predictive system trained on text. It doesn't "think" or "understand" the way humans do. Instead, given your prompt and everything it has generated so far, it estimates how likely each possible next token is and picks one, using patterns learned from its training data.

A helpful way to think about it:

Prompt → Model predicts next token → Appends it and repeats → Output response

This means that, for a given model, the instructions and context you provide are the main lever you have over its output.
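To make the predict-and-repeat loop concrete, here is a toy Python sketch. The `NEXT_TOKEN_PROBS` table is a hypothetical stand-in for a real model: it only looks at the previous token, whereas an LLM scores every token in its vocabulary using the whole context. It also always picks the most likely token (greedy decoding); many APIs sample instead, which is one reason the same prompt can produce different answers.

```python
# Hypothetical next-token probabilities. A real LLM computes a distribution over its
# whole vocabulary with a neural network; this table only looks at the previous token.
NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"Hello": 0.9, "Hi": 0.1},
    "Hello": {",": 0.7, "!": 0.3},
    "Hi": {"!": 1.0},
    ",": {" world": 0.8, " there": 0.2},
    " world": {"!": 0.6, "<end>": 0.4},
    " there": {"!": 1.0},
    "!": {"<end>": 1.0},
}


def predict_next(tokens: list[str]) -> str:
    """Greedy decoding: return the most likely next token given the tokens so far."""
    probs = NEXT_TOKEN_PROBS[tokens[-1]]
    return max(probs, key=probs.get)


def generate(max_tokens: int = 10) -> str:
    """Predict a token, append it, and repeat until <end> or the token limit."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        next_token = predict_next(tokens)
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the output so far becomes input for the next step
    return "".join(tokens[1:])  # drop the <start> marker


print(generate())  # Hello, world!
```

The `max_tokens` cap plays the same role as the output-length limit that hosted APIs let you set: it stops generation even if the model never predicts an end marker.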

Tokens: The Real Currency of AI

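LLMs don't read characters or words directly; they process tokens. A token is a small chunk of text, such as a whole word, part of a word, or a punctuation mark, and exactly how text is split depends on the model's tokenizer. Tokens might look like: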

- "hello"

- "ing"

- "("

- "user_name"
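The sketch below illustrates the idea of splitting text into subword pieces, using greedy longest-match over a tiny, hypothetical vocabulary (`VOCAB`). Production models use learned tokenizers (often byte-pair encoding or a similar subword scheme) with far larger vocabularies, so real splits will differ.

```python
# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of entries.
VOCAB: set[str] = {"hello", "user", "_", "name", "load", "ing", "(", ")", " "}


def tokenize(text: str, vocab: set[str] = VOCAB) -> list[str]:
    """Greedy longest-match: at each position, take the longest vocabulary entry
    that matches; unknown characters fall back to single-character tokens."""
    longest = max(len(entry) for entry in vocab)
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until something matches.
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens


print(tokenize("hello user_name"))  # ['hello', ' ', 'user', '_', 'name']
print(tokenize("loading("))         # ['load', 'ing', '(']
```

Notice that `user_name` comes out as three tokens with this toy vocabulary; how many tokens it takes in a real model depends on that model's tokenizer.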

Every request you send is broken into tokens, and most hosted APIs charge per token, usually at separate rates for:

- Input tokens (the prompt)

- Output tokens (the response)

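If you want to control cost and speed, you must control token counts. Cutting a 3,000-token prompt to 500 tokens removes about 83% of the input-token cost (2,500 of 3,000 tokens). The saving on the whole request is smaller, because output tokens are billed separately and many providers price them higher than input tokens.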
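The sketch below checks that arithmetic with a small cost function. Because output tokens are billed as well, the saving on the whole request is smaller than the saving on the prompt alone. The prices ($3 and $15 per million input and output tokens) and the 400-token response are hypothetical placeholders; substitute your provider's actual rates.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request, given prices per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Hypothetical prices ($ per million tokens) and an assumed 400-token response.
INPUT_PRICE, OUTPUT_PRICE = 3.0, 15.0
long_prompt = request_cost(3_000, 400, INPUT_PRICE, OUTPUT_PRICE)   # $0.0150
short_prompt = request_cost(500, 400, INPUT_PRICE, OUTPUT_PRICE)    # $0.0075

print(f"Input-token saving: {1 - 500 / 3_000:.0%}")                  # Input-token saving: 83%
print(f"Whole-request saving: {1 - short_prompt / long_prompt:.0%}")  # Whole-request saving: 50%
```

With a longer response or a higher output price, the whole-request saving shrinks further, since the output portion of the bill stays the same.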

Context Windows: The Model's Short-Term Memory

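Each model has a context window: the maximum number of tokens it can process in a single request, counting both the input (including any earlier conversation your app resends) and the generated output. The model itself keeps no memory between requests.

If a conversation outgrows the window, the request is either rejected or, in many chat apps, trimmed by dropping (or summarizing) the oldest messages. When that happens:

- The earliest tokens are no longer sent to the model

- The model "forgets" earlier context

- Answers that depend on the dropped context become less accurate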

This explains why long chats drift or forget details.
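Applications that trim history usually drop the oldest messages first, which is exactly what makes long chats forget. Here is a minimal sketch of that strategy: keep the system message, then keep the newest messages that fit within a token budget. The `Message` type, the `fit_to_window` helper, and the four-characters-per-token estimate (a common rule of thumb for English text) are illustrative assumptions; a real application should count tokens with the model's own tokenizer and set `max_tokens` to the context window minus the room reserved for the response.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def estimate_tokens(text: str) -> int:
    """Rough rule of thumb: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def fit_to_window(messages: list[Message], max_tokens: int) -> list[Message]:
    """Keep system messages, then the newest turns that fit in the budget.

    Older turns are dropped first, which is why long chats 'forget' early details.
    """
    system = [m for m in messages if m.role == "system"]
    others = [m for m in messages if m.role != "system"]
    budget = max_tokens - sum(estimate_tokens(m.content) for m in system)
    kept: list[Message] = []
    for message in reversed(others):  # walk from newest to oldest
        cost = estimate_tokens(message.content)
        if cost > budget:
            break  # this turn and everything older is dropped
        kept.append(message)
        budget -= cost
    return system + kept[::-1]  # restore chronological order


history = [
    Message("system", "You are a concise coding assistant."),
    Message("user", "Explain tokens. " * 10),
    Message("assistant", "Tokens are chunks of text. " * 10),
    Message("user", "And context windows?"),
]
trimmed = fit_to_window(history, max_tokens=100)
print([m.role for m in trimmed])  # ['system', 'assistant', 'user']
```

In this example the oldest user question is dropped while the assistant's reply to it survives; a production version might drop or summarize whole user/assistant pairs instead.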

Why Prompts Matter More Than You Expect

LLMs rely heavily on clear instructions. Small changes in phrasing can produce completely different responses.

Example:

Vague: "Write code for a login system."

Better: "Write a TypeScript example of a secure login route using Next.js API routes and JWTs."

Good prompts reduce errors, shorten responses, and save tokens.
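One way to keep prompts consistently specific is to build them from explicit parameters rather than writing them ad hoc. The `build_prompt` helper below is a hypothetical sketch, not part of any SDK; it assembles the "Better" prompt from named parts and can append extra constraints.

```python
from typing import Optional


def build_prompt(task: str, language: str, stack: str,
                 constraints: Optional[list[str]] = None) -> str:
    """Assemble a specific prompt from named parts (hypothetical helper, not an SDK API)."""
    prompt = f"Write a {language} example of {task} using {stack}."
    if constraints:
        # Explicit constraints narrow the range of acceptable answers.
        prompt += "\nConstraints:\n" + "\n".join(f"- {c}" for c in constraints)
    return prompt


print(build_prompt("a secure login route", "TypeScript", "Next.js API routes and JWTs"))
# Write a TypeScript example of a secure login route using Next.js API routes and JWTs.
```

Keeping the parts separate makes it easy to vary one detail (say, the framework) while holding the rest of the prompt fixed.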

Model Limitations Developers Must Know

LLMs are powerful but not perfect:

- They hallucinate: they can confidently produce incorrect information

- They don't verify facts against a trusted source on their own

- They are sensitive to ambiguous instructions

Understanding these limitations helps you design safer, more predictable features.
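Because model output can't be trusted blindly, a common safeguard is to ask for structured output (such as JSON) and validate it before your code uses it. The sketch below assumes a hypothetical response shape, `{"name": str, "age": int}`. It catches malformed or incomplete responses, but it cannot catch a well-formed answer that is factually wrong; that still needs checking against a trusted source.

```python
import json
from typing import Any, Optional

# Hypothetical shape we asked the model to return: {"name": str, "age": int}
REQUIRED_FIELDS: dict[str, type] = {"name": str, "age": int}


def validate_model_output(raw: str) -> Optional[dict[str, Any]]:
    """Return the parsed object if it has the expected fields and types, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None  # not JSON at all, e.g. chatty prose instead of data
    if not isinstance(data, dict):
        return None
    for field, expected_type in REQUIRED_FIELDS.items():
        value = data.get(field)
        # bool is a subclass of int in Python, so reject True/False for int fields.
        if not isinstance(value, expected_type) or (
            expected_type is int and isinstance(value, bool)
        ):
            return None
    return data


print(validate_model_output('{"name": "Ada", "age": 36}'))    # {'name': 'Ada', 'age': 36}
print(validate_model_output('{"name": "Ada", "age": "36"}'))  # None
print(validate_model_output("Sure! Here is the data you asked for."))  # None
```

What to do when validation fails (retry, fall back to a default, or surface an error) is an application decision.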

Key Takeaways

- LLMs predict tokens, not truth, and depend heavily on your prompt

- Tokens impact cost, speed, and quality

- Context windows limit how much the model can "remember"

- Clear prompts produce better, cheaper responses

- LLMs hallucinate, so outputs must be validated for critical use cases

With these basics in place, you can start integrating AI more confidently into real-world applications.